Brool brool (n.) : a low roar; a deep murmur or humming

Julia

I’ve been playing around with Julia lately. Not for any particular reason, just to get a feel for it.

Why Julia instead of one of the new languages from established groups (e.g., Rust or Go)? Primarily because Go and Rust seem to be at a lower level – Julia “feels” very much like Python or Clojure, which in my book is a good thing.

There was an article in Wired that made the Julia creators seem either douchey or odd, depending on your interpretation of stuff – really, the “Language to End All Languages?” – but if you read the forums and whatnot, they are reasonable, and actually really smart guys.

The Julia creators have a heavy background in scientific computing, so there’s a strong emphasis on numerical accuracy and being able to effectively replace R / Matlab. Such a strong emphasis, in fact, that Julia starts the arrays at 1 instead of 0.

Yeah, yeah, I know. It doesn’t bother me that much (well, I’ve been doing a lot of Lua lately), but some people have a positively allergic reaction to it. But if you can get past that, then you find out that the language is really well designed.

Overall Syntax: Feels much like Python (for example, array comprehensions look exactly the same), with a nice single line lambda form (a -> a+1). Indentation is non-significant, blocks are terminated with “end”.

It’s primarily an imperative language, so there are a fair number of destructive operations (for example, push! to append an item to an array), although it does have a set of functional calls as well – you’ve got your map, reduce, so on and so forth. No currying / partial application unless you write it out, though – i.e., given f(x,y), to get a partially applied f you would have to do something like y -> f(10, y).
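For readers coming from the Python side, here is a minimal sketch of the same idea in Python rather than Julia: fixing the first argument by writing the closure out by hand, next to the standard-library functools.partial shortcut. The two-argument f is a made-up stand-in, not anything from Julia or from this post.

```python
from functools import partial


def f(x, y):
    # Hypothetical two-argument function, only here for illustration.
    return x * 100 + y


# Written out by hand: the same shape as the Julia `y -> f(10, y)`.
f10_lambda = lambda y: f(10, y)

# Python's standard library also ships a ready-made helper for this.
f10_partial = partial(f, 10)

print(f10_lambda(5), f10_partial(5))
```

Both forms call f with x fixed to 10; the hand-written closure is the only one of the two that the post describes for Julia.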

Dictionaries are marked with “=>”, i.e.:

    [ "This", "is", "an", "array" ]
    [ 0 => "This is a dictionary" ]

They have an interesting take on {} vs. [] – {} marks an array or dictionary that has the most general type (i.e., Any), while [] contains an array or dictionary that has the most restricted type.

    julia> [10,20]
    2-element Array{Int64,1}:
     10
     20

    julia> {10,20}
    2-element Array{Any,1}:
     10
     20

String concatenation is “*”. That feels weird to me (and precludes Python’s surprisingly useful 10 * ' ' notation). Edit: Brought up in the comments – you can use the exponentiation operator for this (^).

Types: No null – yeah, baby! That’s doing it right.

Well, you can have nullable types, but you have to explicitly declare them – i.e., Union(String, Nothing) for something that can accept a null value in place of a string. Unfortunately there is not the level of checking at the compile level that you would get in OCaml, for example – i.e., the OCaml:

… will give you a warning on compile because you aren’t handling one of the cases, but the somewhat equivalent:

    function slen(s :: Union(String, Nothing))
        return length(s)
    end

… will not. I don’t think there’s a way to do a sum type a la OCaml (i.e., a discriminated union).

Nonetheless, this is so awesome in principle that I am willing to forgive quite a bit in the language.

Ultimately the typing is looser than Haskell / OCaml – for example, there is no way to specify, even with declarations, “this function takes a function that takes two integers.”

The language has no objects. This would have been a non-starter for me a few years ago, but after using OCaml + Haskell + Clojure, it no longer bothers me. There are things called methods, but the methods of a function are the implementations for the various type signatures it accepts, chosen by multiple dispatch.
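As a rough analogy only (this is Python, and it is not how Julia implements dispatch), the idea of one function name with several methods keyed by argument types can be sketched with a tiny hand-rolled registry. The names _METHODS, method and combine are all hypothetical, and this toy matches exact types only, whereas Julia also considers subtypes and picks the most specific match.

```python
# Hypothetical mini-registry: (function name, argument types) -> implementation.
_METHODS = {}


def method(*types):
    """Register the decorated function as the method for this exact type signature."""
    def register(fn):
        name = fn.__name__
        _METHODS[(name, types)] = fn

        def dispatch(*args):
            # Choose the implementation from the runtime types of ALL arguments.
            signature = tuple(type(a) for a in args)
            impl = _METHODS.get((name, signature))
            if impl is None:
                raise TypeError(f"no method {name}{signature}")
            return impl(*args)

        return dispatch
    return register


@method(int, int)
def combine(a, b):
    return a + b


@method(str, int)
def combine(a, b):
    return a * b


print(combine(2, 3))     # uses the (int, int) method
print(combine("ab", 2))  # uses the (str, int) method
```

The point of the sketch is that the methods hang off the function name rather than off an object, and the choice depends on every argument, not just the first.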

Type declarations are optional, and the language will usually default to “Any” if you don’t declare one. There is a nice parameterized type mechanism in place, so something like:

    type Point{T}
        x :: T
        y :: T
    end

… declares a Point type that can be parameterized with different types – i.e., Point{Float64}(0,0).

Macros: The language offers hygienic macros – you can deal with the source as an expression tree in a very Lispy way. They have some nice ones defined – @elapsed for timing, @profile to get profile results, and especially @pyimport to import a Python module and use it (!). Of course, the allure of macros is that you can use them to supplement the language in interesting ways; there are already a few macros that give you pattern matching like Haskell / OCaml.

Performance: Well, I use Python, so obviously performance is not a first priority with me, but – the Julia guys are using an LLVM-based JIT underneath everything, so performance should be very good for the computationally bound stuff. This does add the overhead of JIT-compiling everything the first time it is called (or whenever a function is called with different types for parameters); to get around this they’re using caching of the base library, and they plan to allow caching of other libraries as well. Startup on my laptop is about 2 seconds, whereas Python is instant. AOT compilation should be possible but it’s not in there yet (although I’m not sure yet how they’re figuring out the needed signatures for all the functions – are they doing full type inference during the compilation? Need to look at it).

The libraries aren’t tuned, yet, and there are quite a number of slow spots, but they “feel” like stuff that can easily be improved. For example, a lot of the stuff I do is text processing, so it involves line-by-line reading of large text files; Python has optimized that to the nth degree, so Python can read a text file substantially faster than Julia right now. There are some other weird performance anomalies – for example, this:

    function alloc_test(n)
        s = "" :: ASCIIString
        for i = 1:n
            s = s * " "
        end
        return length(s)
    end

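… runs much slower than the Python sorta-equivalent, and the run time climbs steeply as n increases (each concatenation builds a new string, so the total work is probably closer to quadratic than literally exponential). It looks like there’s quite a bit of extra copying / checking for UTF8 going on. Good news is that something like: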

    function alloc_array(n)
        s = Char[]
        for i = 1:n
            push!(s, ' ')
        end
        return length(s)
    end

… runs quickly. The language has great promise on the performance front.
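The post does not show the Python it was compared against, so the following is only a guess at what the "sorta-equivalent" might look like: the same one-space-at-a-time loop, plus a list-based counterpart to alloc_array. The name alloc_list is made up for this sketch.

```python
def alloc_test(n):
    # Build a string one space at a time, like the Julia alloc_test.
    s = ""
    for _ in range(n):
        s = s + " "
    return len(s)


def alloc_list(n):
    # Counterpart of alloc_array: append to a growable list instead.
    s = []
    for _ in range(n):
        s.append(" ")
    return len(s)


print(alloc_test(100_000), alloc_list(100_000))
```

CPython can often extend the string in place when nothing else references it, which may be part of why the naive loop holds up there; that is an implementation detail rather than a language guarantee.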

Concurrency: Julia has a nice set of concurrency primitives (although you don’t quite have the richness that you do with, say, Clojure). Threads are true threads, it is easy to dispatch threads and retrieve the value later, and there are some higher level constructs that let you (for example) solve multiple SVDs at once:

    M = {rand(1000,1000) for i=1:10}
    pmap(svd, M)

Very neat stuff. Even neater, though, is that Julia has the ability to easily dispatch to machines in a network cluster and share computations, in case you have a bank of machines and need to simulate the weather or crack a code or something.

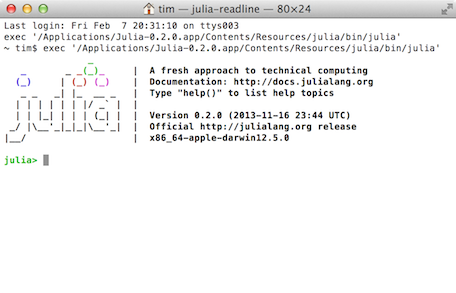

REPL: Julia has some nice capabilities at the REPL. You can help(function) to get help, or methods(function) to see all the dispatches available for a given function. There’s also @profile for profiling, @elapsed for quick benchmarks, and a set of code_typed/code_llvm/code_native to see the raw code that is getting generated.

There are some weaknesses, as well. It was handy in Python to be able to do dir("") to see the allowable methods on string, but there’s no equivalent in Julia – well, you don’t have a string object at all, but there also does not seem to be a way to search every method signature and return everything that takes a string. Edit: Mentioned in the comments was methodswith() – so you could do methodswith(String) or even methodswith(typeof("")).

No debugger yet, although there are people working on one.

There are even Julia extensions for the IPython notebook so you can use the rich IPython interface to write Julia programs, and something like Gadfly for the plotting.

Bottom Line: So, what you have is a language in the early stages, but – smart BDFLs / community, easy integration with both C and Python, well designed language with the feel of Python and Clojure, good concurrency (death to the GIL), and the potential for excellent performance. I mean, what’s not to love? It’s a cool enough project that I plan on spending some time working in it – can always write documentation, if nothing else.
